Generate AI Voice from Audio: Voice Cloning Explained

Imagine hearing a familiar voice speak words that were never recorded, yet every pause, tone, and emotion feels authentic. This is no longer science fiction. The ability to generate AI voice from audio using voice cloning technology is transforming how businesses, creators, and educators produce spoken content at scale. From branded voice assistants to multilingual marketing videos, AI-generated voices are rapidly becoming part of everyday digital experiences.

However, alongside the excitement comes confusion. How does AI voice cloning actually work? How much audio is needed? Is it reliable, ethical, and suitable for real business use? In this in-depth guide, we explain voice cloning clearly and practically, cutting through hype to help you understand the technology, its benefits, and its limitations, so you can make informed decisions with confidence.

Introduction to AI Voice Cloning

What Does “Generate AI Voice from Audio” Mean?

Generating an AI voice from audio means training an artificial intelligence model to learn the unique characteristics of a human voice from recorded speech and then reproduce that voice saying entirely new content. Unlike traditional text-to-speech systems that rely on generic synthetic voices, voice cloning captures the identity of a real speaker.

The AI analyzes elements such as pitch, rhythm, pronunciation, accent, and vocal texture. Once trained, it can generate new speech from text input that closely resembles the original speaker, even if the person never recorded those sentences.

In simple terms:

- Input: Human voice recordings (audio samples)

- Process: AI learns voice patterns using deep learning

- Output: New speech that sounds like the original speaker

Why AI Voice Cloning Is Gaining Massive Attention

AI voice cloning is not growing by accident. Several global trends are accelerating its adoption:

- Explosion of audio content: Podcasts, audiobooks, video narration, and voice assistants are booming.

- Advances in deep learning: Neural networks now model human speech with unprecedented realism.

- Cost and speed pressures: Businesses want scalable voice production without repeated studio sessions.

According to a 2024 report by Grand View Research, the global text-to-speech market is projected to grow at over 14% CAGR, driven largely by AI voice technologies. Voice cloning sits at the cutting edge of this transformation.

What Is AI Voice Cloning? A Clear Explanation

AI Voice Cloning vs Traditional Text-to-Speech

Traditional text-to-speech (TTS) systems convert text into spoken words using pre-built synthetic voices. While modern TTS sounds natural, it does not replicate a specific individual’s voice.

| Feature | Traditional TTS | AI Voice Cloning |
|---|---|---|
| Voice identity | Generic | Specific person |
| Training data | Large pre-recorded datasets | Custom audio samples |
| Personalization | Low | Very high |
| Business branding | Limited | Strong brand voice consistency |

Voice cloning, by contrast, focuses on identity replication. This makes it especially valuable for brands, public figures, educators, and content creators who want a consistent and recognizable voice.

Core Components of Voice Cloning Technology

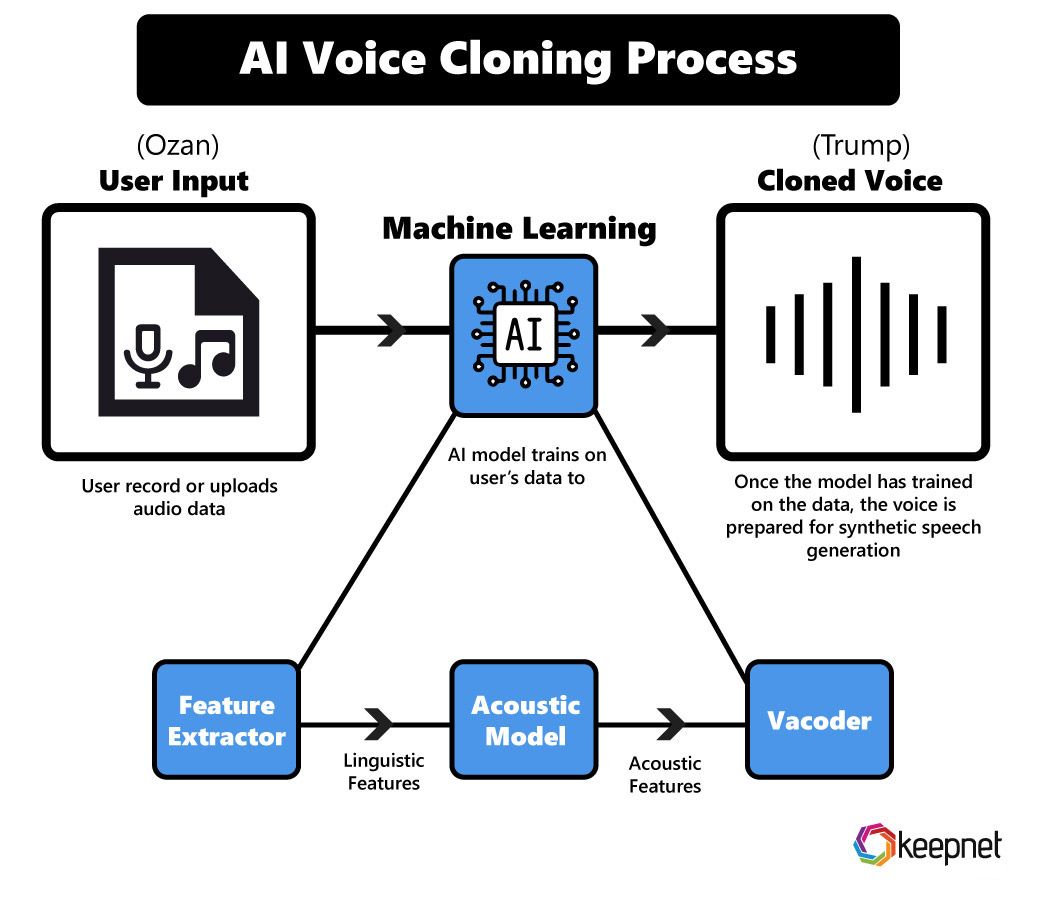

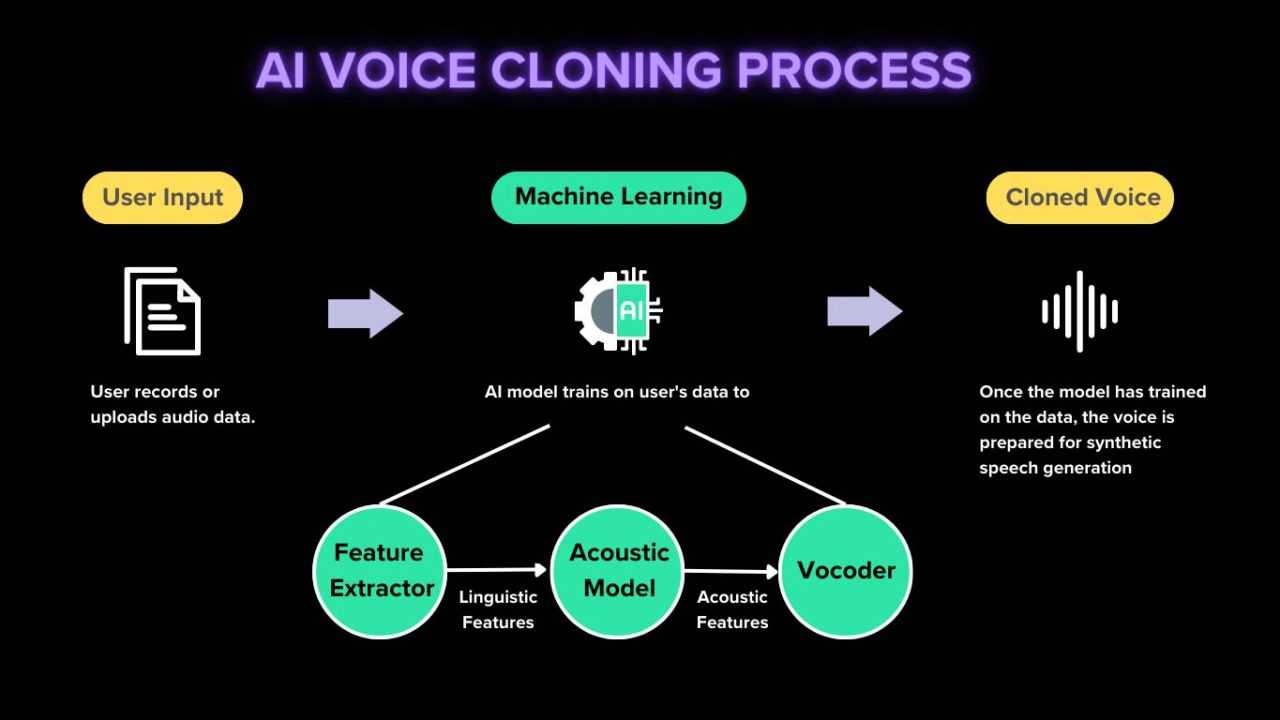

Behind the scenes, AI voice cloning relies on several advanced technologies working together:

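- Automatic Speech Recognition (ASR): Transcribes the training recordings into text so each audio segment can be paired with what was said; the transcript is often aligned to the audio at the level of phonetic units.

- Acoustic Models: Learn how a specific voice produces sounds, mapping text or phonetic input to acoustic features such as spectrograms.

- Neural Vocoders: Convert those acoustic features into realistic audio waveforms.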

- Transformer Models: Capture long-range dependencies in speech, improving natural flow.

As AI researcher Dr. Yoshua Bengio notes, “Modern neural networks can model subtle variations in human speech that were impossible just a decade ago.” This leap in modeling power is what makes high-quality voice cloning feasible today.
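The Transformer bullet above rests on one core operation, scaled dot-product attention, in which every position in a sequence weighs every other position; this is what lets a model relate sounds that are far apart in an utterance. The NumPy sketch below implements the standard single-head formula softmax(Q·Kᵀ / sqrt(d_k))·V. It is illustrative only: the function name is our own, the random "frames" are stand-in data, and real speech models add learned projections, multiple heads, and masking on top of this step.

```python
import numpy as np

def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention: softmax(Q K^T / sqrt(d_k)) V.

    queries: (n_q, d_k), keys: (n_k, d_k), values: (n_k, d_v)
    Returns the attended output (n_q, d_v) and the weight matrix (n_q, n_k).
    """
    d_k = queries.shape[-1]
    scores = queries @ keys.T / np.sqrt(d_k)
    # Subtract each row's maximum before exponentiating for numerical stability.
    scores = scores - scores.max(axis=1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)  # softmax over keys
    return weights @ values, weights

# Toy example: 5 stand-in "frames" of 4-dimensional features attending to each other.
rng = np.random.default_rng(0)
frames = rng.normal(size=(5, 4))
output, attn = scaled_dot_product_attention(frames, frames, frames)
print(output.shape, attn.sum(axis=1))  # each row of attention weights sums to 1
```

Because every frame attends to every other frame in one step, distance within the sequence does not limit which sounds can influence each other.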

How AI Voice Is Generated from Audio: Step-by-Step

Step 1: Collecting Voice Samples

The first step to generate AI voice from audio is gathering voice recordings. Quality matters more than quantity, but both influence results.

Typical requirements include:

- 5–30 minutes for basic voice cloning

- 1–2 hours for high-fidelity commercial models

- Clean audio with minimal background noise

For example, a podcast host recording in a quiet room will achieve far better results than phone-call audio with compression artifacts.
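Before uploading recordings, it can help to check how much usable audio you actually have. The sketch below uses Python's built-in `wave` module and NumPy to report the duration, peak level, and share of clipped samples for a WAV file, then totals durations against the 5-minute lower bound mentioned above. It assumes 16-bit PCM WAV input; the function names and the 0.999 clipping threshold are illustrative choices, not requirements of any particular cloning platform.

```python
import wave
import numpy as np

MIN_TOTAL_SECONDS = 5 * 60  # lower bound for basic cloning cited in this guide
CLIP_LEVEL = 0.999          # illustrative: samples this close to full scale count as clipped

def summarize_recording(source):
    """Report duration, peak level, and clipped-sample share for a 16-bit PCM WAV.

    `source` may be a file path or a binary file object.
    """
    with wave.open(source, "rb") as wav:
        if wav.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM audio")
        rate = wav.getframerate()
        channels = wav.getnchannels()
        raw = wav.readframes(wav.getnframes())
    # Interleaved little-endian int16 samples, scaled to the range [-1, 1).
    samples = np.frombuffer(raw, dtype="<i2").astype(np.float64) / 32768.0
    if samples.size == 0:
        return {"seconds": 0.0, "peak": 0.0, "clipped_fraction": 0.0}
    return {
        "seconds": samples.size / (channels * rate),
        "peak": float(np.max(np.abs(samples))),
        "clipped_fraction": float(np.mean(np.abs(samples) >= CLIP_LEVEL)),
    }

def enough_audio(sources):
    """Return (meets_minimum, total_seconds) across several recordings."""
    total = sum(summarize_recording(s)["seconds"] for s in sources)
    return total >= MIN_TOTAL_SECONDS, total
```

For example, `enough_audio(["intro.wav", "chapter1.wav"])` (hypothetical file names) reports whether the set clears the minimum. A high clipped fraction usually means the recording level was set too high.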

Step 2: Audio Preprocessing and Feature Extraction

Before training, the audio is cleaned and standardized. This stage removes background noise, normalizes volume, and splits speech into manageable segments.
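The two cleanup steps named here, volume normalization and segmentation, can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions: the audio is a mono float array in [-1, 1], normalization is plain peak scaling, and segments are split wherever frame energy stays below a fixed threshold for long enough. The function names and default thresholds are hypothetical; production pipelines typically rely on dedicated noise reduction, loudness standards, and voice activity detection instead.

```python
import numpy as np

def normalize_peak(audio, target_peak=0.9):
    """Scale a float waveform so its loudest sample reaches target_peak."""
    peak = np.max(np.abs(audio))
    return audio if peak == 0 else audio * (target_peak / peak)

def split_on_silence(audio, sample_rate, frame_ms=20, threshold=0.02, min_silence_ms=300):
    """Split a waveform into speech segments separated by low-energy gaps.

    A frame counts as silent when its RMS falls below `threshold`; a run of
    silent frames at least `min_silence_ms` long ends the current segment.
    """
    frame_len = max(1, int(sample_rate * frame_ms / 1000))
    n_frames = len(audio) // frame_len
    frames = audio[: n_frames * frame_len].reshape(n_frames, frame_len)
    voiced = np.sqrt(np.mean(frames ** 2, axis=1)) >= threshold  # per-frame RMS test
    min_gap = max(1, min_silence_ms // frame_ms)

    bounds, start, silent_run = [], None, 0
    for i, is_voiced in enumerate(voiced):
        if is_voiced:
            if start is None:
                start = i              # a new segment begins
            silent_run = 0
        elif start is not None:
            silent_run += 1
            if silent_run >= min_gap:  # gap is long enough: close the segment
                end = i - silent_run + 1  # index of the first silent frame
                bounds.append((start * frame_len, end * frame_len))
                start, silent_run = None, 0
    if start is not None:              # close a segment that runs to the end
        bounds.append((start * frame_len, (n_frames - silent_run) * frame_len))
    return [audio[s:e] for s, e in bounds]
```

Splitting on pauses keeps each segment aligned with natural phrase boundaries, which makes the pieces easier to pair with transcripts later.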

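The AI then converts audio into features such as spectrograms, which show how much energy each frequency carries at each moment in time. Many systems use mel spectrograms, which space frequency bands to approximate how humans perceive pitch. These features give neural networks a structured numerical view of speech patterns.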
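As a rough illustration of this feature-extraction step, the sketch below computes a magnitude spectrogram with NumPy: it slides a Hann window across the waveform, takes a Fourier transform of each frame, and keeps the magnitudes, so each row describes the frequency content of one short slice of time. The frame size (`n_fft`) and step (`hop`) are illustrative defaults, and `hz_to_mel` uses the widely used HTK-style mel formula. Real systems usually go further and pool these bins into mel bands, often with libraries such as librosa or torchaudio.

```python
import numpy as np

def spectrogram(audio, sample_rate, n_fft=1024, hop=256):
    """Magnitude spectrogram: rows are time frames, columns are frequency bins."""
    if len(audio) < n_fft:
        raise ValueError("audio is shorter than one analysis frame")
    window = np.hanning(n_fft)
    n_frames = 1 + (len(audio) - n_fft) // hop
    frames = np.stack([audio[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    magnitudes = np.abs(np.fft.rfft(frames, axis=1))     # shape (n_frames, n_fft // 2 + 1)
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sample_rate)  # frequency of each bin in Hz
    return magnitudes, freqs

def hz_to_mel(freq_hz):
    """HTK-style mel scale: equal mel steps sound roughly equally far apart in pitch."""
    return 2595.0 * np.log10(1.0 + np.asarray(freq_hz, dtype=float) / 700.0)

# Example: a one-second 440 Hz tone should produce its strongest bin near 440 Hz.
sr = 16000
t = np.arange(sr) / sr
mags, freqs = spectrogram(np.sin(2 * np.pi * 440 * t), sr)
print(mags.shape, freqs[mags.mean(axis=0).argmax()])
```

Each bin is `sample_rate / n_fft` Hz wide (about 15.6 Hz here), so larger frames give finer frequency detail at the cost of coarser timing.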

Step 3: Training the Voice Model

During training, the AI learns how the speaker pronounces sounds, varies pitch, and transitions between words. Deep neural networks adjust millions of parameters to minimize the difference between generated and real speech.
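The idea of adjusting parameters to shrink the gap between generated and real speech can be shown at toy scale. The sketch below fits a single weight matrix that maps made-up input features to made-up target "spectrogram" frames by gradient descent on the mean squared error. It is not a voice model: real systems train deep networks with millions of parameters and often combine several losses (for example, L1 or L2 distance on mel spectrograms), but the update loop follows the same principle. The function name and data are hypothetical.

```python
import numpy as np

def train_toy_model(inputs, targets, lr=0.1, steps=500, seed=0):
    """Fit W so that inputs @ W approximates targets, using gradient descent on MSE.

    inputs:  (n_examples, n_features), standing in for text/phoneme features
    targets: (n_examples, n_bins), standing in for spectrogram frames
    """
    rng = np.random.default_rng(seed)
    W = rng.normal(scale=0.01, size=(inputs.shape[1], targets.shape[1]))
    losses = []
    for _ in range(steps):
        error = inputs @ W - targets                # generated minus real
        losses.append(float(np.mean(error ** 2)))   # mean squared error
        grad = 2.0 * inputs.T @ error / error.size  # gradient of the loss w.r.t. W
        W -= lr * grad                              # step downhill
    return W, losses

rng = np.random.default_rng(1)
X = rng.normal(size=(200, 8))     # 200 toy examples, 8 input features each
W_true = rng.normal(size=(8, 4))  # hidden mapping the model should discover
Y = X @ W_true                    # 4 toy "spectrogram bins" per example
W, losses = train_toy_model(X, Y)
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.6f}")
```

The loss falls steadily as the weights approach the hidden mapping; in real training the same loop runs over far larger models and datasets, which is why training time depends on the factors listed below.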

Training time can range from minutes to hours depending on:

- Model complexity

- Amount of training data

- Computational resources

Commercial platforms often handle this automatically, making voice cloning accessible even to non-technical users.

Step 4: Voice Synthesis and Output

Once trained, the model can generate speech from any text input. Advanced systems allow control over speed, tone, pauses, and even emotional expression.

For businesses, this means producing consistent voice content on demand, without re-recording every update or localization.

Types of AI Voice Cloning Technologies

Zero-Shot Voice Cloning

Zero-shot voice cloning imitates a new voice from just a few seconds of reference audio, without training a model specifically for that speaker. While impressive, it often sacrifices accuracy and emotional nuance.

This approach is useful for experimentation or quick demos but is rarely suitable for professional branding.
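Many zero-shot systems work by compressing the reference clip into a fixed-length speaker embedding that conditions synthesis, and a common way to check how closely a cloned voice matches the original is the cosine similarity between embeddings of real and generated speech. The sketch below shows only that comparison step. It assumes the embeddings have already been produced by some speaker encoder (not shown); the random vectors stand in for real embeddings, and the function names are hypothetical.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def average_embedding(embeddings):
    """Average several per-clip embeddings into one speaker profile, then unit-normalize."""
    mean = np.mean(np.asarray(embeddings, dtype=float), axis=0)
    return mean / np.linalg.norm(mean)

# Stand-in embeddings: a shared "speaker" direction plus per-clip variation.
rng = np.random.default_rng(0)
speaker = rng.normal(size=256)
reference_clips = [speaker + 0.1 * rng.normal(size=256) for _ in range(3)]
cloned_clip = speaker + 0.3 * rng.normal(size=256)
other_speaker = rng.normal(size=256)

profile = average_embedding(reference_clips)
print(cosine_similarity(profile, cloned_clip), cosine_similarity(profile, other_speaker))
```

Higher scores mean the output sits closer to the reference speaker. What counts as "close enough" depends on the encoder, so any threshold should be calibrated on your own recordings.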

Few-Shot Voice Cloning

Few-shot systems require several minutes of audio and strike a balance between speed and quality. Many commercial tools fall into this category.

They are popular for:

- Marketing narration

- Online courses

- Internal business content

Custom-Trained Voice Models

Custom voice cloning involves training a dedicated model on extensive voice data. This delivers the highest realism and consistency but requires a larger investment.

Large enterprises often choose this route for brand ambassadors, virtual assistants, or long-term content strategies.

The sections below cover real-world applications, benefits, ethical considerations, and how to choose the right AI voice solution for your needs.

Real-World Applications of AI Voice Cloning

Business and Marketing Use Cases

For businesses, the ability to generate AI voice from audio unlocks a new level of consistency and scalability. Brands can create a recognizable voice identity that remains uniform across ads, explainer videos, onboarding materials, and customer touchpoints.

Common business applications include:

- Branded voice assistants and chatbots

- Marketing videos and product demos

- Automated customer support responses

- Internal training and onboarding narration

A 2023 survey by PwC found that companies using AI-driven automation in customer experience reduced operational costs by up to 30%, while improving response speed and consistency. Voice cloning plays a key role in this shift.

Content Creation and Media Production

Content creators are among the fastest adopters of AI voice cloning. YouTubers, podcasters, and media studios use cloned voices to scale production without sacrificing personality.

Real-world examples include:

- Generating multilingual narrations without re-recording

- Maintaining a consistent voice during illness or travel

- Producing audiobooks faster and more affordably

As audio-first platforms continue to grow, AI-generated voices are becoming a practical creative tool rather than a novelty.

Education and E-Learning

In education, voice cloning enables personalized and accessible learning experiences. Educators can create consistent, high-quality narration for lessons, while students benefit from clear, engaging audio content. Typical applications include:

- Personalized AI tutors

- Language learning with native-like pronunciation

- Accessible content for visually impaired learners

Localization and Global Expansion

Voice cloning also simplifies localization. Businesses can generate the same voice in multiple languages, preserving tone and brand identity across regions. This is especially valuable for global marketing and software products.

Key Benefits of Generating AI Voice from Audio

Cost Efficiency and Scalability

Traditional voice recording requires studios, talent scheduling, and repeated sessions. AI voice cloning can cut these costs substantially: once a model is trained, new audio can be generated on demand without booking another session.

Time Savings

Updates that once required re-recording can now be completed in minutes. This speed is critical for fast-moving marketing campaigns and agile product teams.

Brand Consistency

A cloned voice ensures that tone, pacing, and pronunciation remain consistent across all content, reinforcing brand recognition.

Accessibility and Inclusion

AI-generated voices make information more accessible, supporting users with reading difficulties or visual impairments and enabling inclusive digital experiences.

Limitations and Challenges of AI Voice Cloning

Dependence on Audio Quality

Even the most advanced AI cannot fully compensate for poor-quality input. Noisy or inconsistent recordings reduce realism and intelligibility.

Emotional Depth and Context

While modern models handle intonation well, conveying deep emotional nuance remains challenging, particularly in complex storytelling scenarios.

Ethical and Legal Risks

Voice cloning raises serious ethical questions. Without clear consent, cloning someone’s voice can lead to misuse, impersonation, or fraud. Key concerns include:

- Voice ownership and consent

- Deepfake risks

- Regulatory compliance

According to MIT Technology Review, responsible deployment of voice AI must prioritize transparency and user consent to maintain trust.

Ethical Use of AI Voice Cloning

Consent and Ownership

Ethical voice cloning begins with explicit permission from the voice owner. Businesses should document consent and define usage boundaries clearly.

Transparency in AI-Generated Content

Audiences deserve to know when a voice is AI-generated. Clear disclosure builds trust and aligns with emerging global AI guidelines.

Responsible Adoption for Businesses

Organizations should implement governance policies to prevent misuse and ensure AI voice tools align with legal and ethical standards.

How to Choose the Right AI Voice Cloning Tool

Key Evaluation Criteria

- Voice realism and naturalness

- Language and accent support

- Customization and emotional control

- API and system integration

- Transparent pricing

Open-Source vs Commercial Solutions

Open-source tools offer flexibility but require technical expertise. Commercial platforms provide ease of use, support, and reliability, making them more suitable for most businesses.

Why Use AI.DuyThin.Digital to Research AI Voice Solutions

Trusted Reviews from Vietnam’s Leading AI Community

AI.DuyThin.Digital is built to help users navigate the complex AI landscape with clarity. Our platform delivers unbiased reviews based on real-world testing and expert analysis.

Feature Comparisons and Transparent Pricing

We compare AI voice tools side by side, highlighting strengths, limitations, and pricing so you can choose solutions that truly fit your needs.

Save Time and Make Confident Decisions

Instead of spending weeks researching scattered information, you gain a centralized, trustworthy resource to guide your AI adoption journey.

The Future of AI Voice Cloning Technology

Real-Time and Conversational Voice AI

Future systems are expected to generate speech with minimal delay, enabling more natural, real-time conversations with AI assistants.

Emotionally Aware Voice Models

Research is rapidly improving emotional expressiveness, bringing AI voices closer to human-level storytelling.

Multimodal AI Integration

Voice cloning will increasingly combine with visual avatars and interactive AI, creating immersive digital experiences.

Frequently Asked Questions (FAQ)

How much audio is needed for AI voice cloning?

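Few-shot tools typically need 5 to 30 minutes of clean audio. Zero-shot systems can work from a few seconds of audio but with lower accuracy, while custom high-fidelity models benefit from 1 to 2 hours of recordings.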

Is AI voice cloning legal?

Generally yes, when you have the voice owner’s consent and comply with local laws. Cloning or using someone’s voice without authorization can violate privacy, publicity, or fraud laws in many jurisdictions.

Can AI clone accents and emotions?

Accents are generally reproduced well; emotional nuance is improving but remains limited compared with human voice actors.

Is AI voice cloning safe for business use?

It can be, when deployed with documented consent, clear disclosure, and governance policies. With those safeguards in place, it is increasingly safe and widely adopted.

Conclusion: Key Takeaways

The ability to generate AI voice from audio is reshaping how we create, scale, and personalize spoken content. Voice cloning offers powerful advantages in efficiency, branding, and accessibility, while also demanding responsible and ethical use.

By understanding how the technology works and evaluating tools carefully, businesses and creators can unlock real value without unnecessary risk.

Next Steps

If you are exploring AI voice cloning or other AI solutions, visit AI.DuyThin.Digital to access in-depth reviews, feature comparisons, and transparent pricing insights. Save time on research and make smarter AI decisions with confidence.